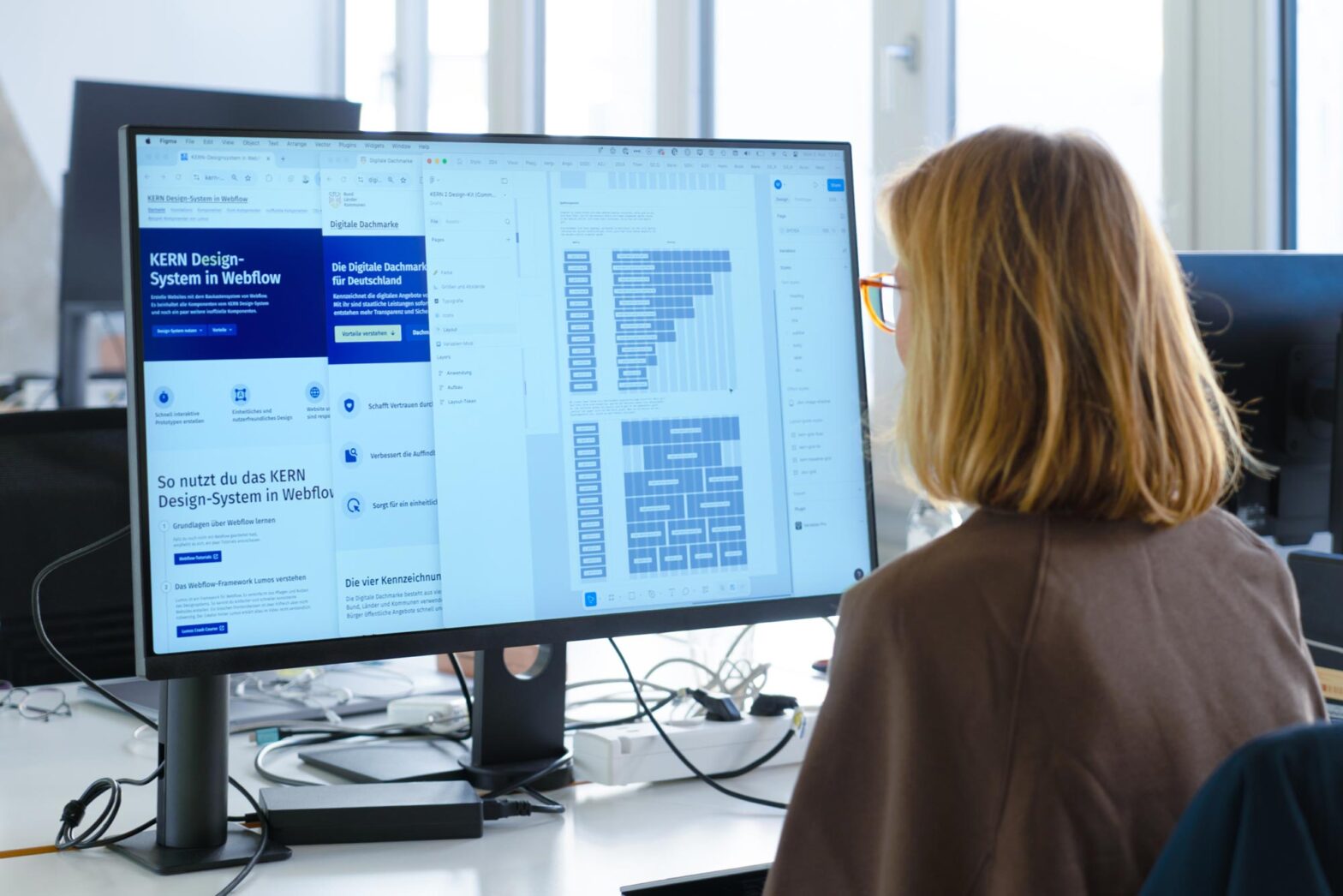

No more mess! After 5 years, we have stopped developing our own design component library and design system. Instead of contributing to more fragmentation, we are adopting the KERN Design System wherever possible. The services we develop at DigitalService will use everything it offers. Plus, we are contributing to its extension and further development.

Sharing our design system strategy publicly

Maintaining countless design systems across the public sector makes no sense. It doesn’t increase quality. It neither builds more trust among users nor makes financial sense. Yet it occurs throughout Germany and at all levels of government.

Internationally, colleagues have demonstrated how things can work instead. The Dutch NL Design System has been adopted by various ministries and cities like Amsterdam, Utrecht, and Rotterdam. Italy’s central design system is used all over the country, including local schools. Many federal departments and agencies in the United States have adopted the U.S. Web Design System. It is also used at the state level, such as in Iowa.

For us, the decision to stop in-house development wasn’t easy. We took our time. We hesitated at first. Our colleagues from the State of Schleswig-Holstein, the Senate Chancellery of the City of Hamburg, and the KERN Design System team started persuading us years ago. But we had to take a close look, and then another one. Over the years, we asked many questions and provided feedback. Then, last autumn, a small group of designers and engineers investigated adopting an external design system. They developed criteria to assess the candidates against. Eventually, our team looked closely at the GOV.UK Design System and the KERN Design System. Each has its own strengths and advantages. We decided on the homegrown German option: KERN.

In the public sector, a unified design system can unlock vast benefits. The GOV.UK Design System saves the UK taxpayer approximately £36 million per year. The NHS design system reduces delivery times by up to 50%, as Tero recently wrote. There is ample evidence that shared design systems enhance the usability and accessibility of services.

Over the past few years, we have learned that more than 30 design systems exist in the German public sector. The actual number is likely to be much higher. DigitalService taking this step alone doesn’t make a big difference. Many others have to take it, too, including the providers of the many form builder tools procured by local and state governments. The Federal Ministry for Digital & Government Modernisation will need to play an even bigger role here through its work on the D-Stack (Germany stack).

However, styles and components aren’t enough to consistently develop good government services. That is why we looked further and started engaging with the GovStack service patterns as well. We hosted international workshops and had conversations with their expert UX working group. We are now working towards bringing patterns informed by GovStack into the KERN Design System.

The blog post about this journey is now published. It reflects the work of many. Take a look, and let us know where you stand on the journey.

Supporting local and state government in applying the Service Standard

To trial an iterated version of our Service Standard introduction workshop, we visited the city of Potsdam to consult with a joint team in charge of 2 services: hunting permits and fishing licences. Together, they represented 4 different organisations: a state ministry, a district government, a local government association and the state-owned IT supplier. They were interested in learning about the Service Standard and in checking with us how well they are working towards meeting its points.

Robert, project lead and product manager for the Service Standard work, and I thought 3 hours would be plenty. We were wrong. After providing an overview of the standard and setting the scene, we offered some individual reading time so the 9 participants could look more closely at the 13 points of the standard and what they require teams to do.

Individually, they marked each requirement of every standard point red, amber or green. We then played back and discussed these ratings as a group. I had to hold back from asking too many distracting questions, as I was eager to learn about their efforts over the past 5+ years. They have made considerable progress in various areas, but also have work ahead. We agreed to spend more time with them remotely. I also wondered how we might be able to help, particularly with point 1, ‘Understanding users and identifying needs’. I want to explore the possibility of offering user research support.

I enjoy working with service teams. It’s what I did as a service assessor in the UK, and here I can do similar work. Hopefully, we can scale it further. It’s well worth the investment of time.

In about 1.5 weeks, I will have another opportunity to assist teams and learn more about their work, progress, and challenges. I will run a version of the workshop with service teams from the city-state of Hamburg.

Learning more about the sludge service audits

Following up on the conversation with the OECD colleagues 2 weeks ago, I had the opportunity to see the sludge audit tool in action this week.

As I had heard, it comes with built-in rating questions for each possible step in a service user’s journey. For example, the step ‘read website’ includes rating criteria for the accuracy, consistency, purpose and sufficiency of information. All criteria are rated on a 5-point Likert scale, ranging from ‘very difficult’ to ‘easy’.

Beforehand, a member of the service team needs to enter each possible step in the service journey, which generates a high-level journey map inside the tool. For each step, a duration can be entered. Combining the qualitative and quantitative data gives a comprehensive view of the service and shows where sludge, or friction, exists. In the ‘Customer experience review’ screen, one can view all rated steps in an overview and also see them plotted on a 2-by-2 ‘Burden concern matrix’.
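To make the mechanics more concrete, here is a minimal Python sketch of the kind of data such an audit collects: journey steps, a duration per step, and user ratings per criterion on a 5-point scale. It is not the OECD tool’s code or data model. The class and function names, the 1-to-5 numeric coding, and especially the two axes of the 2-by-2 matrix (time taken versus average ease rating) are assumptions made purely for illustration, since I have not seen how the tool actually positions steps.

```python
from dataclasses import dataclass, field
from statistics import mean

# Assumed numeric coding of the 5-point Likert scale: 1 = 'very difficult', 5 = 'easy'.
LIKERT_MIN, LIKERT_MAX = 1, 5


@dataclass
class JourneyStep:
    """One step of a service journey, e.g. 'read website' (hypothetical model)."""
    name: str
    duration_minutes: float
    # Rating criterion (e.g. 'accuracy') -> ratings collected from real users.
    ratings: dict[str, list[int]] = field(default_factory=dict)

    def ease_score(self) -> float:
        """Average of all user ratings across every criterion of this step."""
        scores = [s for per_criterion in self.ratings.values() for s in per_criterion]
        if not scores:
            # Without user ratings there is nothing credible to score.
            raise ValueError(f"no user ratings recorded for step {self.name!r}")
        if any(not LIKERT_MIN <= s <= LIKERT_MAX for s in scores):
            raise ValueError(f"rating outside {LIKERT_MIN}..{LIKERT_MAX} in {self.name!r}")
        return mean(scores)


def burden_quadrant(step: JourneyStep, time_threshold: float,
                    ease_threshold: float = 3.0) -> str:
    """Place a step in an assumed 2-by-2 matrix: time taken vs. ease rating."""
    slow = step.duration_minutes > time_threshold
    hard = step.ease_score() < ease_threshold
    if slow:
        return "slow and hard" if hard else "slow but easy"
    return "quick but hard" if hard else "quick and easy"


def experience_review(steps: list[JourneyStep], time_threshold: float):
    """Overview of all rated steps, hardest (lowest ease score) first."""
    rows = [(s.name, s.ease_score(), s.duration_minutes,
             burden_quadrant(s, time_threshold)) for s in steps]
    return sorted(rows, key=lambda row: row[1])


if __name__ == "__main__":
    steps = [
        JourneyStep("read website", 10, {
            "accuracy": [2, 2], "consistency": [3, 1],
            "purpose": [4, 2], "sufficient information": [2, 2],
        }),
        # Hypothetical step and criterion, for illustration only.
        JourneyStep("upload documents", 45, {"accuracy": [4, 5]}),
    ]
    for row in experience_review(steps, time_threshold=20):
        print(row)
```

The sketch deliberately refuses to score a step that has no user ratings, reflecting that an audit built only on a team’s own estimates would not be credible.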

I have not seen a more detailed, data-driven approach to journey mapping so far. I am a little bit in awe. Collecting all these data points is quite a bit of work, though. Not all service teams will be able to make such an effort. For high-volume services whose service owners take a long-term view, however, it is feasible. User researchers would have to incorporate the rating scales 1:1 into their usability tests, and the results would then have to be transferred to the tool. That requires capabilities that few service teams in Germany currently have access to.

One thing that was not entirely clear from my conversations about sludge audits is how many service users usually get involved. I have not heard of a standard sample size. Users’ subjective assessment of the service quality is vital to making a sludge audit credible. If only service team members entered their own estimates and gut feelings, the result would be little more than an untested hypothesis. A team member’s guess about the accuracy, consistency or sufficiency of information would not be very informative. It would be fluff. However, the more service teams already understand about their users’ experience across all journey steps, and the more measurements they have already taken, the faster such an audit can be done.

To learn more about how the tool is used in practice, I have reached out to user-centred design folks in Finland and Australia, the countries where most sludge audits have been carried out. I will report back on what I find.

Discussing technology shifts in Hamburg

On Monday evening, I travelled to Hamburg to join ‘The Shift’ on Tuesday. For 2 days, the Sparkassen Innovation Hub brought together executives and leaders from the 350+ savings banks to explore various forms of innovation. I joined for the first day and gave a 25-minute talk right before lunch.

Approximately 60 participants at the interactive event discussed 5 different shifts: product, customer, strategy, employees and technology. I was invited to join the last of these. I assembled a new-ish talk reflecting on how tasks, or jobs-to-be-done, remain largely the same, while the solution, and often the technology used to accomplish the job, gets replaced. I reused parts of a ‘jobs-to-be-done’ talk I wrote at GDS while working in the Data Infrastructure Programme. I mentioned several things from that time, including GOV.UK on voice assistants and smart speakers, the passport renewal service, which uses quite a bit of technology behind the scenes, and how we used cryptographic features like hash trees when building the GOV.UK Registers platform.

The literal translation of my title is “of horses saddled from behind”. In German, the phrase means starting from the wrong end. The context: people begin with the technology and an idea of the solution before they know what problem they are trying to solve. I mentioned our work on digital identity and the property tax declaration service. I had to work the Service Standard in somewhere and even (forgive me) quoted Steve Jobs: “You’ve got to start with the customer experience and work backward to the technology. You can’t start with the technology then try to figure out where to sell it.”

The talk was well received in the small-ish setting. We had time for a few questions, and I stayed until the evening for an extended exchange. As usual, my slides are on GitHub.

I found a few similarities between our government world and that of the Sparkassen, the public banking group (which is the largest financial services group in all of Europe and goes back to 1778).

What’s next

On Thursday, I’ll travel to Wiesbaden, the state capital of Hesse. There, Public Service Lab is teaming up with the city to run its annual conference day on Friday. I will deliver the parts of the opening talk that cover the Service Standard. Afterwards, I will run a short workshop on the digital umbrella brand. Approximately 100 public servants, mostly from state and local government, will join.